| import torch
import torch.nn as nn
import torch.nn.functional as F
from torchvision import datasets, transforms
import torchvision
from torch.autograd import Variable
import torch.optim as optim
import matplotlib.pyplot as plt
import sys

BATCH_SIZE = 200  # Number of samples loaded per training step; can be set higher when running on a GPU
EPOCHS = 5  # Total number of training epochs
DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")  # Let torch decide whether to use the GPU; a GPU is recommended because it is much faster

train_loader = torch.utils.data.DataLoader(
    datasets.FashionMNIST('../datasets/data', train=True, download=True,
                          transform=transforms.Compose([
                              transforms.ToTensor(),
                              transforms.Normalize(mean=[0.5], std=[0.5])  # Normalize the pixel values from [0, 1] to [-1, 1]
                          ])),
    batch_size=BATCH_SIZE, shuffle=True)

test_loader = torch.utils.data.DataLoader(
    datasets.FashionMNIST('../datasets/data', train=False, transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.5], std=[0.5])
    ])),
    batch_size=BATCH_SIZE, shuffle=True)

# Class labels (index order follows the FashionMNIST label ids 0-9)
classes = ('T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
           'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot')

# Network structure: conv->ReLU->conv->ReLU->pool->conv->ReLU->pool->Linear->ReLU->Linear->log-softmax
class MNISTConvNet(nn.Module):
    def __init__(self):
        super().__init__()
        # Formula: OH = (H + 2P - FH)/S + 1; OW = (W + 2P - FW)/S + 1
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=24, kernel_size=3, stride=1, padding=0)  # 24 channels, 26*26
        self.conv2 = nn.Conv2d(in_channels=24, out_channels=48, kernel_size=3, stride=1, padding=0)  # 48 channels, 24*24
        self.conv3 = nn.Conv2d(in_channels=48, out_channels=96, kernel_size=3, stride=1, padding=0)  # 96 channels, 10*10 (input is 12*12 after pooling)
        self.fc1 = nn.Linear(in_features=96*5*5, out_features=400)
        self.fc2 = nn.Linear(in_features=400, out_features=10)

    def forward(self, x):
        out = self.conv1(x)
        out = F.relu(out)
        # out = F.max_pool2d(input=out, kernel_size=(2,2), stride=2)  # disabled; would give 24 channels, 13*13
        out = self.conv2(out)
        out = F.relu(out)
        out = F.max_pool2d(input=out, kernel_size=(2, 2), stride=2)  # 48 channels, 12*12
        out = self.conv3(out)
        out = F.relu(out)
        out = F.max_pool2d(input=out, kernel_size=(2, 2), stride=2)  # 96 channels, 5*5
        out = out.view(-1, 96*5*5)  # Flatten to (batch, 2400)
        out = self.fc1(out)
        out = F.relu(out)
        out = self.fc2(out)
        out = F.log_softmax(out, dim=1)  # Log-probabilities, to be paired with F.nll_loss
        return out

def train(model, optimizer, epoch):
    model.train()  # Put the model in training mode
    train_loss = 0
    correct = 0
    for batch_id, (images, labels) in enumerate(train_loader):
        images = images.to(DEVICE)  # Move the tensors to the configured device
        labels = labels.to(DEVICE)
        optimizer.zero_grad()  # Clear the gradients left over from the previous step
        output = model(images)
        loss = F.nll_loss(output, labels)
        loss.backward()
        optimizer.step()  # Update the parameters using the computed gradients
        if (batch_id + 1) % 30 == 0:
            print(f'Train Epoch: {epoch} [{batch_id * len(images)}/{len(train_loader.dataset)} ({ 100. * batch_id / len(train_loader):.0f}%)]\tLoss: {loss.item()}')
        # loss is the mean over the batch, so weight it by the batch size to accumulate a per-sample sum
        train_loss += loss.item() * len(images)
        # print(f'train loss: {loss.item()}, train_loss_sum: {train_loss}')
        pred = output.max(1, keepdim=True)[1]  # Index of the largest log-probability
        correct += pred.eq(labels.view_as(pred)).sum().item()
    # Average loss per sample (same scale as in test()) and accuracy in percent
    return train_loss / len(train_loader.dataset), 100. * correct / len(train_loader.dataset)

def test(model):
    model.eval()  # Put the model in evaluation mode
    test_loss = 0
    correct = 0
    with torch.no_grad():  # Disable gradient tracking; evaluation needs no backward pass or parameter updates
        for images, labels in test_loader:
            images, labels = images.to(DEVICE), labels.to(DEVICE)
            output = model(images)
            test_loss += F.nll_loss(output, labels, reduction='sum').item()  # Sum the losses of the whole batch
            pred = output.max(1, keepdim=True)[1]  # Index of the largest log-probability
            correct += pred.eq(labels.view_as(pred)).sum().item()
    test_loss /= len(test_loader.dataset)
    print(f'\nTest set: Average loss: {test_loss:.4f}, Accuracy: {correct}/{len(test_loader.dataset)} ({100. * correct / len(test_loader.dataset):.2f}%)\n')
    return test_loss, 100. * correct / len(test_loader.dataset)

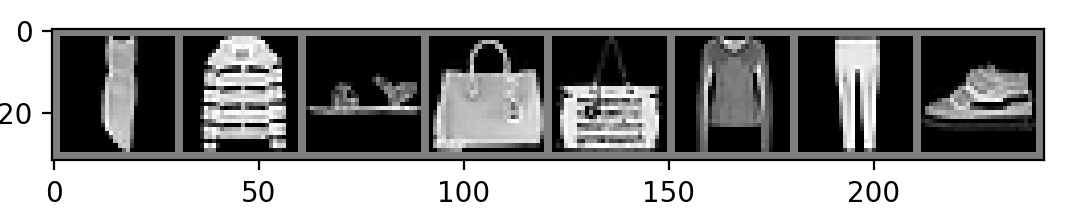
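A note on how the two loss figures are averaged: `test()` sums the per-sample losses with `reduction='sum'` and divides by the dataset size, whereas `F.nll_loss` returns the batch mean by default. The sketch below, in plain Python with made-up numbers, shows one way to combine per-batch means into the same per-sample average. The helper name `per_sample_average` and the batch values are illustrative assumptions, not part of the script.

```python
def per_sample_average(batch_mean_losses, batch_sizes):
    """Combine per-batch mean losses into an average loss per sample."""
    # Multiplying each mean by its batch size recovers the per-batch sum.
    total = sum(m * n for m, n in zip(batch_mean_losses, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical values: two full batches of 200 and a smaller last batch of 100.
batch_means = [0.50, 0.40, 0.10]
batch_sizes = [200, 200, 100]

weighted = per_sample_average(batch_means, batch_sizes)
unweighted = sum(batch_means) / len(batch_means)  # ignores the batch sizes

print(f"weighted: {weighted:.4f}, unweighted: {unweighted:.4f}")
```

The two numbers only agree when every batch has the same size, which is why weighting by batch size is the safer default when the training and validation curves are plotted on the same axis.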

def predict(model):
    val_loader = torch.utils.data.DataLoader(
        datasets.FashionMNIST('../datasets/data', train=False, transform=transforms.Compose([
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.5], std=[0.5])
        ])), batch_size=8, shuffle=True)
    iterator = iter(val_loader)  # Get an iterator over the loader
    X_val, Y_val = next(iterator)  # Fetch one batch; next() is normally used together with iter()
    # Variable has been deprecated since PyTorch 0.4: tensors can be passed to the model directly.
    inputs = X_val.to(DEVICE)  # The model lives on DEVICE, so the inputs must be moved there too
    model.eval()
    with torch.no_grad():  # Inference needs no gradients
        pred = model(inputs)
    # torch.max(input, dim) returns (values, indices). Here input holds the log-softmax outputs;
    # dim=0 takes the maximum of each column, dim=1 the maximum of each row (one row per sample).
    _, pred = torch.max(pred, 1)
    print("Actual labels:", [classes[i] for i in Y_val.tolist()])
    print("Predicted labels:", [classes[i] for i in pred.tolist()])
    img = torchvision.utils.make_grid(X_val)
    img = img.numpy().transpose(1, 2, 0)  # (C, H, W) -> (H, W, C) for matplotlib
    std = [0.5, 0.5, 0.5]  # Per-channel standard deviation used by Normalize
    mean = [0.5, 0.5, 0.5]  # Per-channel mean used by Normalize
    img = img * std + mean  # Undo the normalization
    plt.imshow(img)
    plt.show()

def run_train():
    model = MNISTConvNet().to(DEVICE)
    optimizer = optim.Adam(model.parameters())  # Update the weights with Adam
    # optimizer = optim.SGD(model.parameters(), lr=1e-1, momentum = 0.9)  # Alternative: SGD with a larger learning rate; too small a rate makes gradient descent slow
    train_loss_list, test_loss_list = [], []
    train_acc_list, test_acc_list = [], []
    for epoch in range(1, EPOCHS + 1):
        train_loss, train_acc = train(model, optimizer, epoch)
        torch.save(model.state_dict(), 'fashion_mnist_model.pkl')  # Save the weights so that run_predict() can load them
        test_loss, test_acc = test(model)
        train_acc_list.append(train_acc)
        test_acc_list.append(test_acc)
        train_loss_list.append(train_loss)
        test_loss_list.append(test_loss)
        print(f'Epoch: {epoch} Train Loss: {train_loss:.4f}, Train Acc.: {train_acc:.2f}% | Validation Loss: {test_loss:.4f}, Validation Acc.: {test_acc:.2f}%')
    plt.plot(range(1, EPOCHS+1), train_loss_list, label='Training loss')
    plt.plot(range(1, EPOCHS+1), test_loss_list, label='Validation loss')
    plt.legend(loc='upper right')
    plt.ylabel('Cross entropy')
    plt.xlabel('Epoch')
    plt.show()

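def run_predict():
    model = MNISTConvNet().to(DEVICE)
    # Load the previously trained weights; map_location lets a GPU-trained file load on a CPU-only machine
    model.load_state_dict(torch.load('fashion_mnist_model.pkl', map_location=DEVICE))
    predict(model)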

def show_image():
    train_loader = torch.utils.data.DataLoader(
        datasets.FashionMNIST('../datasets/data', train=True, download=True,
                              transform=transforms.Compose([
                                  transforms.ToTensor(),
                                  # Use the same mean/std as the inverse transform below, so the images display correctly
                                  transforms.Normalize(mean=[0.5], std=[0.5])
                              ])),
        batch_size=64, shuffle=True)
    X_train, Y_train = next(iter(train_loader))  # Fetch one batch from the loader
    print("Image labels:", Y_train.tolist())
    img = torchvision.utils.make_grid(X_train)
    img = img.numpy().transpose(1, 2, 0)  # (C, H, W) -> (H, W, C) for matplotlib
    std = [0.5, 0.5, 0.5]  # Per-channel standard deviation used by Normalize
    mean = [0.5, 0.5, 0.5]  # Per-channel mean used by Normalize
    img = img * std + mean  # Undo the normalization
    plt.imshow(img)
    plt.show()

if __name__ == '__main__':
    if len(sys.argv) <= 1:
        # train: train the model; predict: run inference; img: just display some images
        print("Please pass an argument: train|predict|img")
    else:
        action = sys.argv[1]
        if action == "train":
            run_train()  # Train the model
        elif action == "predict":
            print("-----")
            run_predict()  # Predict the classes of a few images
        elif action == "img":
            show_image()  # Just display some images
        else:
            print("Please pass an argument: train|predict|img")

|
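The output-size formula quoted in the comment of `MNISTConvNet`, OH = (H + 2P - FH)/S + 1, can be checked by hand for this network. The sketch below traces the spatial size of a 28×28 FashionMNIST image through the layers actually used in `forward()` and confirms where the `96*5*5` input size of `fc1` comes from. The helpers `conv_out` and `pool_out` are illustrative names, not PyTorch functions, and the sketch assumes PyTorch's default rounding down for both operations.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Convolution output size: (size + 2*padding - kernel) / stride + 1, rounded down."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Max-pooling output size without padding, rounded down."""
    return (size - kernel) // stride + 1


# (layer name, kind, output channels) in the order used by forward()
layers = [
    ("conv1", "conv", 24),
    ("conv2", "conv", 48),
    ("pool1", "pool", 48),
    ("conv3", "conv", 96),
    ("pool2", "pool", 96),
]

size = 28  # FashionMNIST images are 28x28
trace = []
for name, kind, channels in layers:
    size = conv_out(size, kernel=3) if kind == "conv" else pool_out(size)
    trace.append((name, channels, size))
    print(f"{name}: {channels} channels, {size}x{size}")

# Number of features fed into fc1 after flattening
flat_features = trace[-1][1] * trace[-1][2] ** 2
print("fc1 in_features:", flat_features)
```

If a layer is added or removed (for example, re-enabling the commented-out pooling step after `conv1`), rerunning this trace gives the new value that `fc1` and the `view` call need.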